How is DeepSeek different from ChatGPT (or other LLMs)?

Deep dive into DeepSeek models to understand how they differ from previous, traditional LLMs…

Hello everyone,

Everyone has been talking about DeepSeek for several weeks now! But if you never took the time to really understand it… now is the time! In this article, we are going to deep dive into DeepSeek models and understand how they differ from previous, traditional LLMs…

The rise of AI: from rules to Chinese open source models

Artificial Intelligence has evolved dramatically over the decades, from simple rule-based systems to the complex neural networks we see today!

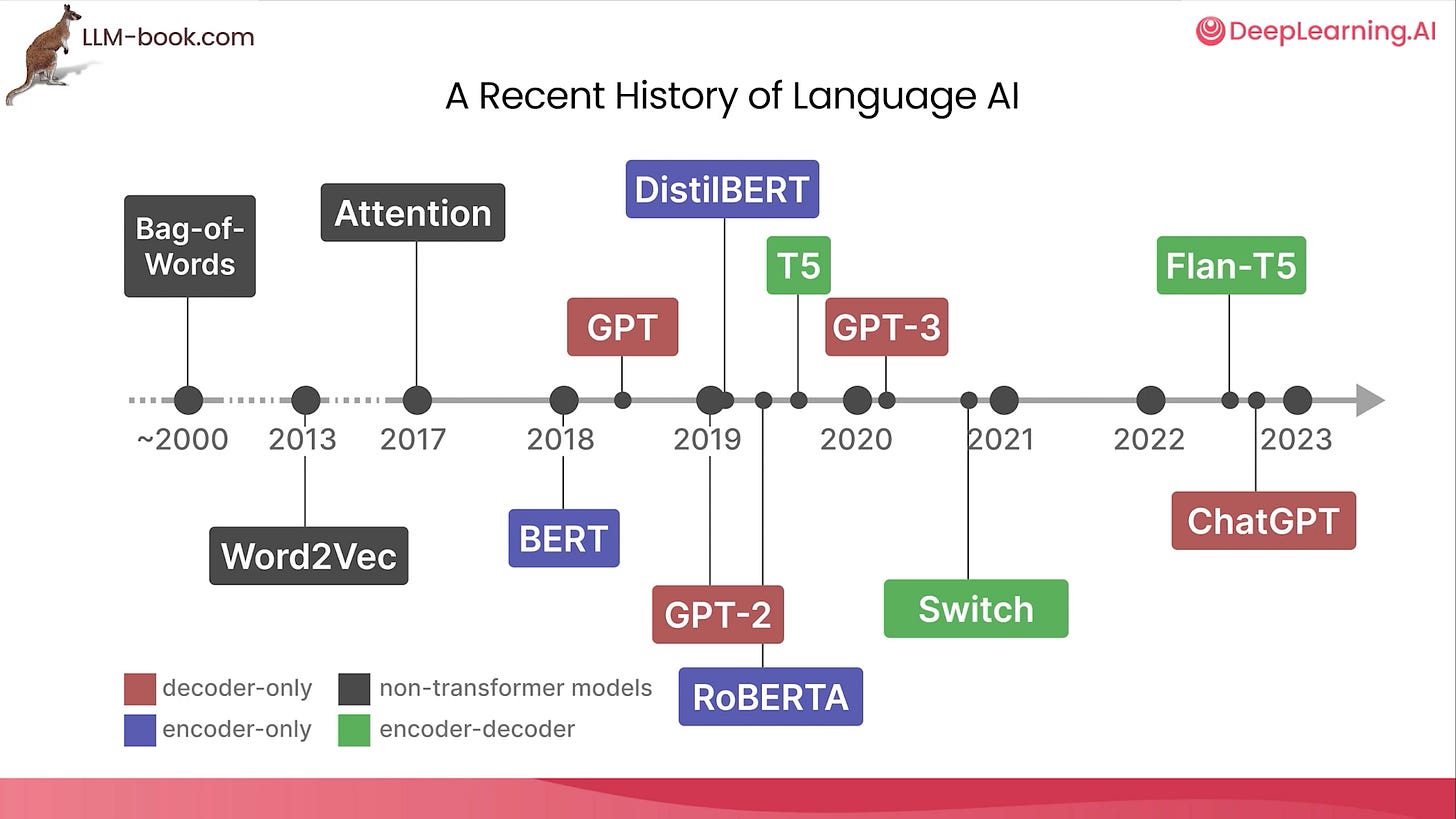

The history of language AI shows a remarkable evolution over the last two decades. Starting with simple Bag-of-Words models around 2000, the field progressed through Word2Vec (2013) until the Transformer architecture, built around the attention mechanism, emerged in 2017. This breakthrough enabled models like BERT and GPT (2018-2019), which rapidly advanced with models like GPT-3 (2020). ChatGPT's release at the end of 2022 was a milestone that brought conversational AI to the mainstream, transforming what was once academic research into powerful tools accessible to everyone.

While companies like OpenAI, Anthropic, and Microsoft developed powerful proprietary models, open source alternatives like Llama and Mistral appeared… and Chinese researchers also got more involved! Indeed, Chinese open source models didn't come out of nowhere: we've been seeing them rise in the various benchmarks for quite a few months now…

At the end of 2024, the CEO of Hugging Face, a platform providing access to open source models, made a prediction that 2025 would be the year of the rise of open source Chinese models! This prediction seems to be coming true with models like DeepSeek, which are now achieving performance comparable to leading Western models like GPT-4o and o1.

We will deep dive into DeepSeek, but first we need to understand a bit more about text generative AI models…

How does ChatGPT work? (and most other previous generative language models)

ChatGPT is an app that integrates generative AI models (GPTs). It works like a “smart” chatbot that “understands” and generates text based on what you type. Here's a simple breakdown of how it works:

Trained on Lots of Text: GPT models have learned from tons of books, articles, and conversations scraped from the internet to understand language and how people communicate.

Predicts the Next Word: when you type something, ChatGPT doesn’t "think" like a human. Instead, it predicts what the best response should be, based on patterns in the data it has seen before… And it does so word by word (more precisely, token by token)!

Context Awareness: it remembers what you just said in the conversation so that it can give relevant responses.

No Real Understanding: even though it sounds smart, ChatGPT doesn’t actually "know" things like a person. It just finds the most likely and useful words to continue the conversation.

Basically, it is like a really advanced “autocomplete” that can answer questions, write stories, help with coding, and more!

ℹ️ Small glossary:

GPT = Generative Pre-trained Transformer (the text generator AI model).

ChatGPT = the app built on top of GPT models to interact with them through a chat interface.

Neural network = a computing system inspired by the human brain that learns to perform tasks by analyzing examples rather than following programmed rules.

Decoder = the part of a generative AI system that transforms internal representations into human-readable output like text or images.

Inference = the process where an AI model uses its trained knowledge to generate predictions or outputs when given new input.

Prompt = the text input given to an AI system that guides or instructs it on what kind of response to generate.

Let’s now deep dive into its (simplified) architecture!

Non-technical overview of a decoder LLM transformer architecture

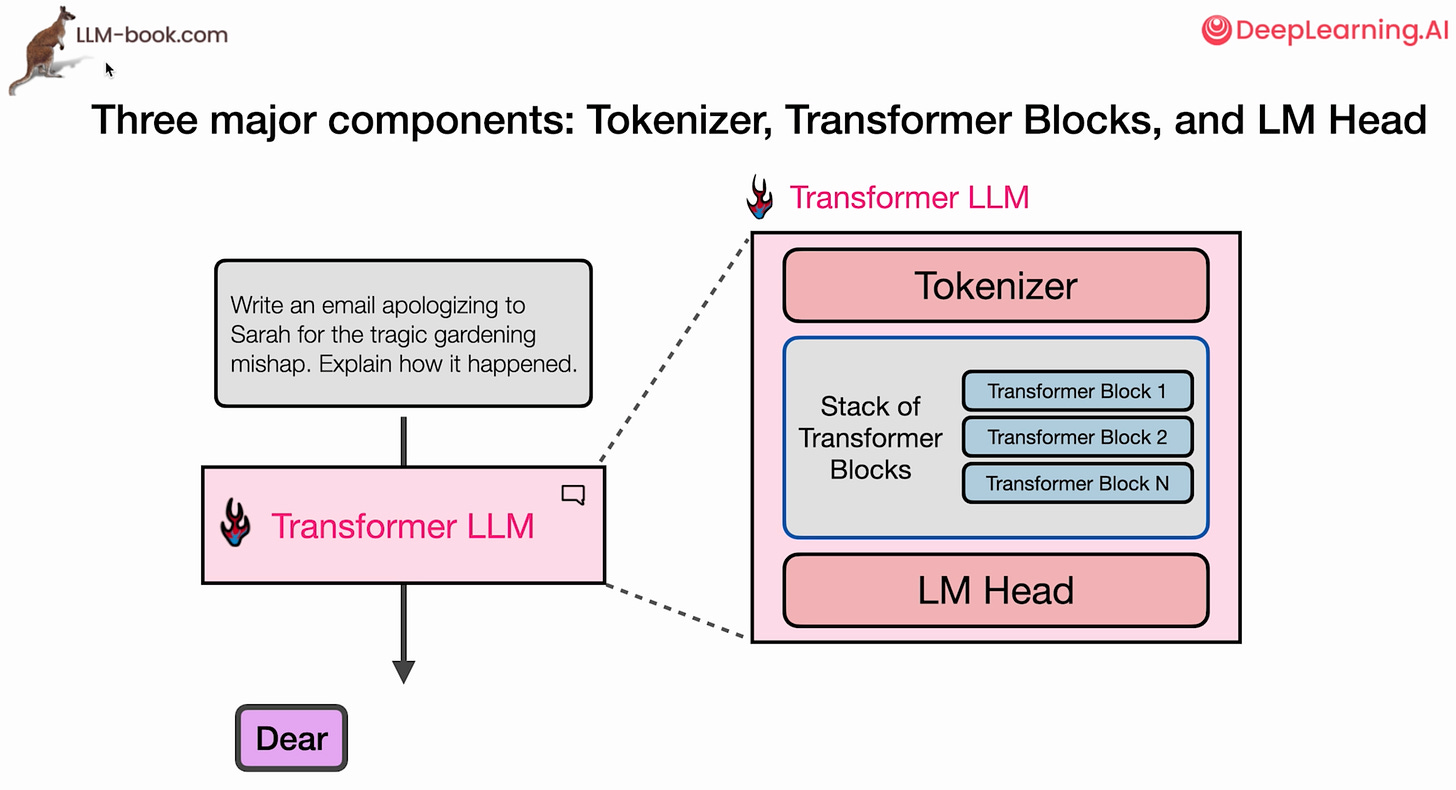

When you send a message (prompt) to a model like GPT, the message is first processed by a “tokenizer”, then by several “transformer blocks”, and finally by the “Language Model Head” (LM Head), which produces the answer you receive.

Let’s see how those 3 main blocks work!

1. Tokenizer

The tokenizer works in two steps:

Tokenization ⇒ breaks the text down into smaller pieces (tokens), based on the words and symbols the tokenizer learned when it was built (its vocabulary).

Example:

“This is an article about DeepSeek” ⇒ “This” “is” “an” “art” “icle” “about” “Deep” “Seek”.

In this example, the tokenizer’s vocabulary doesn’t contain the word “article” but does contain “art” and “icle”, so the word is broken down into 2 tokens; the same goes for “DeepSeek”.

Embeddings ⇒ turns the language (the tokens) into numbers (vectors).

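Example: each previously created token (“This” “is” “an” “art” “icle” “about” “Deep” “Seek”) is first replaced by its ID in the model vocabulary, and each ID is then turned into its embedding vector:

| Token ID | Token |
|---|---|
| 0 | ? |
| 1 | a |
| 2 | as |
| 3 | an |
| … | … |
| 105 | this |
| … | … |

⇒ token IDs [105, 325, 3, 12, 4543, 65, 543, 892], each then mapped to its embedding vector.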
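The two tokenizer steps can be sketched in a few lines of Python. This is a minimal illustration under loudly stated assumptions: the tiny `VOCAB` below is hypothetical (it only reuses the IDs from the example above), the splitting is a simple greedy longest-match rather than the BPE algorithm real GPT tokenizers use, and the embedding table is random instead of learned.

```python
import random

# Hypothetical toy vocabulary (token -> ID), reusing the IDs from the example above.
# Real vocabularies contain tens of thousands of tokens.
VOCAB = {
    "?": 0, "a": 1, "as": 2, "an": 3, "art": 12, "about": 65,
    "this": 105, "is": 325, "Deep": 543, "Seek": 892, "icle": 4543,
}


def lookup(piece: str):
    """Return the ID of a piece (falling back to lowercase), or None if unknown."""
    if piece in VOCAB:
        return VOCAB[piece]
    return VOCAB.get(piece.lower())


def tokenize(text: str) -> list[str]:
    """Step 1: split each word into the longest pieces found in the vocabulary."""
    tokens = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shrink it one character at a time.
            for end in range(len(word), start, -1):
                if lookup(word[start:end]) is not None:
                    tokens.append(word[start:end])
                    start = end
                    break
            else:
                tokens.append("?")  # no known piece: emit the "unknown" token
                start += 1
    return tokens


def to_ids(tokens: list[str]) -> list[int]:
    """Map each token to its vocabulary ID (unknown tokens map to ID 0)."""
    return [lookup(token) or 0 for token in tokens]


# Step 2: a random, untrained embedding table with one small vector per vocabulary ID.
# Real models learn these vectors during training and use thousands of dimensions.
EMBED_DIM = 4
_rng = random.Random(0)
EMBEDDINGS = [
    [_rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)]
    for _ in range(max(VOCAB.values()) + 1)
]


def embed(ids: list[int]) -> list[list[float]]:
    """Look up the embedding vector of each token ID."""
    return [EMBEDDINGS[i] for i in ids]


tokens = tokenize("This is an article about DeepSeek")
ids = to_ids(tokens)
vectors = embed(ids)
print(tokens)  # ['This', 'is', 'an', 'art', 'icle', 'about', 'Deep', 'Seek']
print(ids)     # [105, 325, 3, 12, 4543, 65, 543, 892]
print(len(vectors), len(vectors[0]))  # 8 4
```

Greedy longest-match is used here only because it is short and reproduces the example split; real tokenizers build their vocabulary and merges from data.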

Now that our text is represented as vectors, they pass through the transformer blocks.

2. Transformer Blocks

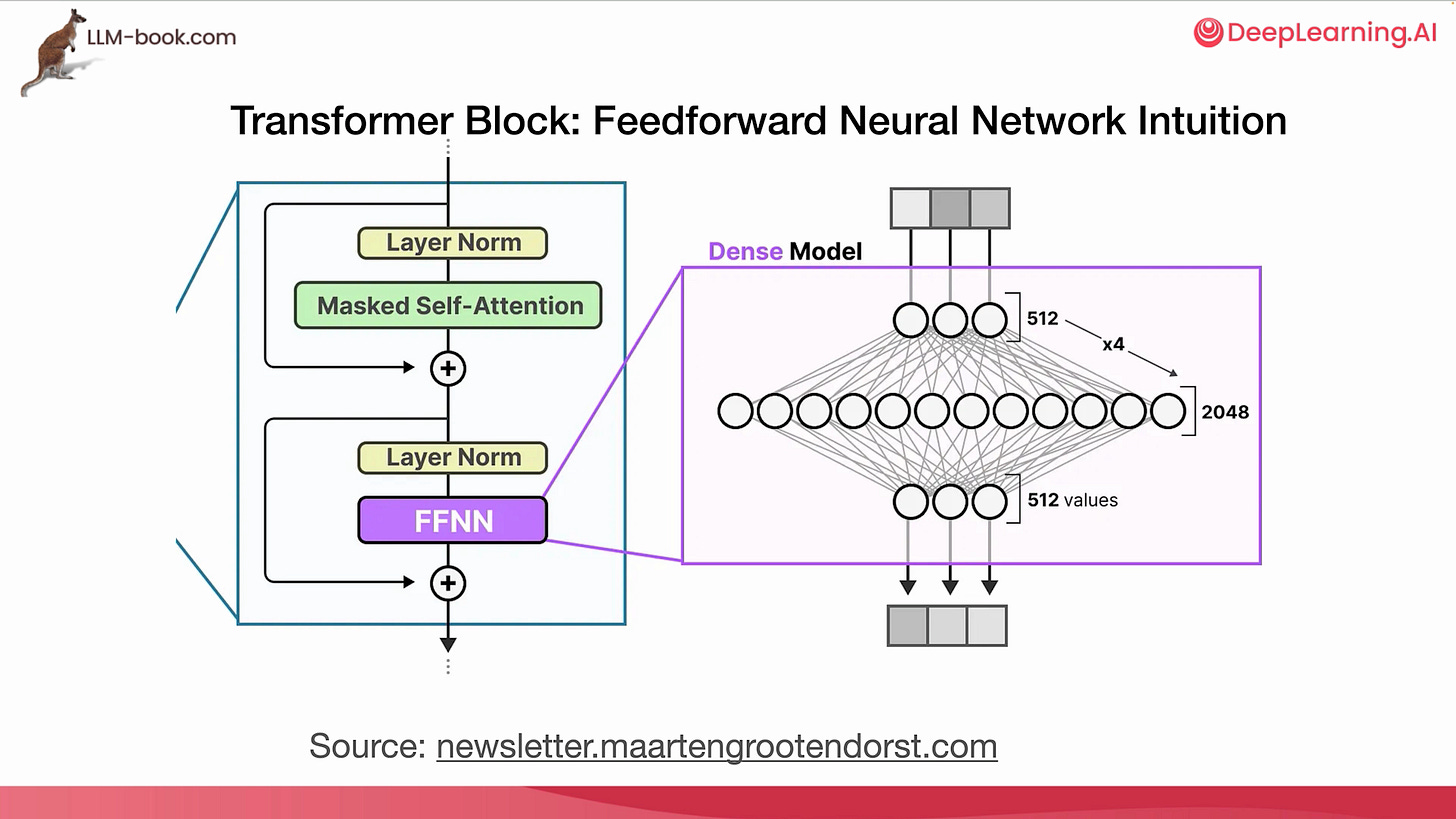

The model stacks several transformer blocks (layers), each of which is a neural network made of two parts:

Masked Self-Attention: a first step that lets the model attend to the previous tokens (and only to them: the following tokens are “masked”) and thus integrate the “context” into its understanding of the token it is currently processing.

1st step ⇒ Relevance scoring: assign a score to how relevant each previous token is to the token currently being processed.

2nd step ⇒ Combining information: combine the information from those tokens, weighted by their relevance scores.

Feed Forward Neural Network:

It captures statistical patterns about which words or concepts tend to follow others in various contexts.

It takes the relationships identified by the attention mechanism and builds richer representations to determine “what these relationships mean” and “how to use this information”.
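A single transformer block can be sketched as follows. This is a deliberately stripped-down version resting on several assumptions: one attention head, no learned query/key/value projections (the token vectors are used directly), no residual connections or layer normalization, and random weights instead of trained ones. It only shows the two attention steps (relevance scoring restricted to the current and previous tokens, then a weighted combination), followed by a small feed-forward network applied to each position.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def masked_self_attention(x):
    """x: list of token vectors. Simplification: queries = keys = values = x, single head."""
    dim = len(x[0])
    outputs = []
    for i, query in enumerate(x):
        # 1st step, relevance scoring: only tokens 0..i are visible (the "mask").
        scores = [dot(query, x[j]) / math.sqrt(dim) for j in range(i + 1)]
        weights = softmax(scores)
        # 2nd step, combining information: relevance-weighted sum of the visible vectors.
        outputs.append([sum(w * x[j][k] for j, w in enumerate(weights)) for k in range(dim)])
    return outputs


def feed_forward(v, w1, b1, w2, b2):
    """Two linear layers with a ReLU in between, applied to one token vector."""
    hidden = [max(0.0, dot(row, v) + b) for row, b in zip(w1, b1)]
    return [dot(row, hidden) + b for row, b in zip(w2, b2)]


def transformer_block(x, ffn_params):
    """Attention mixes information across tokens; the FFN then processes each token separately."""
    return [feed_forward(v, *ffn_params) for v in masked_self_attention(x)]


rng = random.Random(0)
dim, hidden_dim = 4, 8
ffn_params = (
    [[rng.gauss(0, 0.5) for _ in range(dim)] for _ in range(hidden_dim)],  # w1
    [0.0] * hidden_dim,                                                    # b1
    [[rng.gauss(0, 0.5) for _ in range(hidden_dim)] for _ in range(dim)],  # w2
    [0.0] * dim,                                                           # b2
)
x = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(3)]  # 3 token vectors
y = transformer_block(x, ffn_params)
print(len(y), len(y[0]))  # 3 4
```

Because of the mask, changing a later token never changes the outputs computed for earlier tokens, which is what makes left-to-right generation possible.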

The information is then processed by the LM Head, the final step.

3. LM Head

The Language Model head is a neural network that scores the candidates for the next token. It calculates the probability of each token in the model vocabulary being the next one, and the token is then chosen based on a rule we tell it to apply (for example, the highest score).

| Token ID | Token | Probability |
|---|---|---|
| 0 | ? | 0.02% |
| 1 | a | 0.01% |
| 2 | as | 2.05% |
| 3 | an | 5.60% |
| … | … | … |
| 105 | this | 48.32% |
| … | … | … |

In this example (table), if you choose the highest-score strategy, “this” would be the chosen token.
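Here is a minimal sketch of what the LM head does, assuming a hypothetical 5-token vocabulary and made-up weights: a linear layer produces one score (logit) per vocabulary token, a softmax turns the scores into probabilities, and greedy decoding keeps the most probable token. The numbers are illustrative and do not reproduce the percentages in the table.

```python
import math

VOCAB = ["?", "a", "as", "an", "this"]  # hypothetical tiny vocabulary

# Hypothetical LM head weights: one row per vocabulary token, one column per hidden dimension.
HEAD_WEIGHTS = [
    [0.1, 0.0],  # "?"
    [0.0, 0.1],  # "a"
    [0.5, 0.5],  # "as"
    [1.0, 0.5],  # "an"
    [1.0, 1.5],  # "this"
]


def lm_head(hidden):
    """Linear layer: one logit per vocabulary token."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in HEAD_WEIGHTS]


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_decode(probs):
    """Greedy decoding: index of the highest probability."""
    return max(range(len(probs)), key=lambda i: probs[i])


hidden_state = [1.0, 2.0]  # made-up output of the last transformer block for the last token
probs = softmax(lm_head(hidden_state))
for token, p in zip(VOCAB, probs):
    print(f"{token!r}: {p:.2%}")
print("chosen:", VOCAB[greedy_decode(probs)])  # chosen: this
```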

There are several decoding strategies, such as always choosing the highest score (greedy decoding) or adding some randomness (“creativity”) to make the text seem more natural.

ℹ️ If you have already used the OpenAI API, you can change the temperature setting to control this:

Temperature = 0 ⇒ (almost) always choose the highest score.

Temperature > 0 ⇒ add randomness: the higher the temperature, the more random the choice.
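A minimal sketch of temperature sampling, assuming the logits come from an LM head like the one described above (the values below are made up). Dividing the logits by the temperature before the softmax sharpens the distribution when the temperature is low and flattens it when it is high; temperature 0 is treated as greedy decoding. This is a generic illustration of the idea, not OpenAI's actual implementation of its `temperature` parameter.

```python
import math
import random


def sample_next_token(logits, temperature, rng):
    """Pick a token index from logits; temperature 0 means greedy decoding."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # unnormalized softmax
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


logits = [0.1, 0.2, 1.5, 2.0, 4.0]  # made-up scores for "?", "a", "as", "an", "this"
rng = random.Random(42)
for t in (0, 0.5, 2.0):
    picks = [sample_next_token(logits, t, rng) for _ in range(1000)]
    share = picks.count(4) / len(picks)
    print(f"temperature={t}: 'this' chosen {share:.0%} of the time")
```

At a low temperature "this" wins almost every time; at a higher temperature the less likely tokens get picked more often.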

Other key information

The tokens and embeddings of the initial prompt are all processed in parallel, which makes this step fast. The model can process tokens in parallel up to its maximum context size!

Output tokens, on the other hand, are generated one by one:

The 1st generated token is the output computed at the position of the prompt’s last token.

You then have a loop ⇒ the entire prompt + the previously generated token(s) are fed back into the transformer to generate the next token!

The intermediate results (the attention “keys” and “values”) of the prompt tokens + the previously generated token(s) are cached by the model (saved in memory), so they don’t have to be recalculated for each new token of the answer. This is called the “KV cache”.
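The generation loop and its cache can be illustrated with a toy model. Everything below is hypothetical: `ToyModel.key_value` stands in for the expensive per-token work (in a real model, the attention keys and values of every layer) and simply counts how many times it runs, and `next_token` is an arbitrary deterministic rule. The point is the bookkeeping: with the cache, each token's work is done once; without it, the whole sequence is recomputed at every step. The prompt is processed sequentially here for simplicity, whereas real models process it in parallel.

```python
class ToyModel:
    """Hypothetical stand-in for an LLM, used only to count the per-token work."""

    def __init__(self, vocab_size: int = 50):
        self.vocab_size = vocab_size
        self.kv_computations = 0

    def key_value(self, token_id: int, position: int) -> int:
        # Stand-in for computing one token's keys/values in every layer (the expensive part).
        self.kv_computations += 1
        return (token_id * 31 + position) % self.vocab_size

    def next_token(self, values: list[int]) -> int:
        # Arbitrary deterministic rule standing in for attention + FFN + LM head.
        return sum(values) % self.vocab_size


def generate(model: ToyModel, prompt_ids: list[int], max_new_tokens: int,
             use_cache: bool = True) -> list[int]:
    sequence = list(prompt_ids)
    cache: list[int] = []
    for _ in range(max_new_tokens):
        if use_cache:
            # Only compute keys/values for tokens that are not cached yet.
            for position in range(len(cache), len(sequence)):
                cache.append(model.key_value(sequence[position], position))
            values = cache
        else:
            # Recompute keys/values for the whole sequence at every step.
            values = [model.key_value(t, p) for p, t in enumerate(sequence)]
        sequence.append(model.next_token(values))  # feed the new token back into the loop
    return sequence[len(prompt_ids):]


prompt = [105, 325, 3, 12, 4543, 65, 543, 892]
cached, uncached = ToyModel(), ToyModel()
print(generate(cached, prompt, 5) == generate(uncached, prompt, 5, use_cache=False))  # True
print(cached.kv_computations, uncached.kv_computations)  # 12 50
```

Both runs produce the same answer, but with the cache the per-token work grows linearly with the answer length instead of being repeated for the whole sequence at every step.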

Now that we saw the “traditional” architecture of LLMs before DeepSeek, let’s deep dive into DeepSeek!

What is DeepSeek?

DeepSeek is a Chinese lab funded by the hedge fund High-Flyer that recently released a series of fully open-source LLMs: DeepSeek-R1-Zero, DeepSeek-R1… They also released smaller models, fine-tuned from open source models of the Llama 3 and Qwen2.5 series. Some have argued that, in the end, this is more a victory for open source than for China!

It is a direct competitor of models developed by OpenAI (GPTs), Meta, Microsoft, Anthropic or Mistral…

DeepSeek-R1 achieves performance comparable to some leading models like OpenAI's GPT-4o and o1. Furthermore, DeepSeek claims to have achieved these results with only about $6 million of training costs (a figure that covers the final training run of its DeepSeek v3 base model), much lower than the estimated $100 million for GPT-4, and using fewer and less advanced GPUs for training.

Researchers and experts have disputed this cost figure… but what we know for sure is that DeepSeek had fewer and less advanced GPUs than US companies, because the US restricted exports of such GPUs to China.

Also, running DeepSeek distilled models costs much less than running GPT models with equivalent performance.

So… How are DeepSeek models different?

So… how did DeepSeek reach the same level of performance with fewer computational resources, both for training and for usage (inference)?

Optimization of the code and data communication to the maximum

For the training part, they optimized their code and the way they use their GPUs to the maximum! ⇒

They used a lower-level language for programming Nvidia GPUs (PTX, instead of the traditionally used CUDA) to enable fine-grained optimizations ⇒ this assembly-like language is closer to the GPU hardware (less abstracted). To use an analogy:

CUDA is like using a high-level recipe to cook a meal ⇒ it's easy to follow and works well for most people, but you don't control every tiny detail.

PTX is like manually adjusting the temperature, knife angles, and ingredient timing at a molecular level ⇒ harder to do, but it allows expert chefs to maximize efficiency and precision.

PTX is much harder to develop and maintain, which is why most people prefer to use CUDA.

They reconfigured the GPUs to speed up communication and workflows:

DeepSeek reconfigured the 132 processing units (streaming multiprocessors, or SMs) of each of its H800 GPUs, setting aside 20 of them specifically for handling communication between servers, to make data transfer faster and avoid slowdowns.

Analogy: imagine a restaurant kitchen with 132 chefs, but 20 of them are assigned only to delivering ingredients and orders between stations to keep everything running smoothly. They also optimized how chefs coordinate tasks, making them work more efficiently and reducing wasted time.

Chain-of-Thought for the reasoning

The model breaks its reasoning down step by step and explains it “out loud”:

This is not new: previous reasoning models (like OpenAI’s o1) already used it, but hid it!

This prompting technique improves results because it forces the model to “reason”.

It’s less of a black box as the model is explaining its thoughts while processing the prompt.

If the answer is wrong, we can more easily fix the prompt by identifying where the model went wrong in its reasoning.

ℹ️ The DeepSeek team also used chain-of-thought in the training phase, so that the model self-reflects on its answers and improves itself, as detailed below!
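Because the reasoning is written out, it can be separated from the final answer and inspected on its own. The sketch below assumes the DeepSeek-R1 output convention of wrapping the reasoning in `<think>…</think>` tags before the answer; the sample response is invented for illustration.

```python
import re

# Assumes R1-style responses: "<think>reasoning</think> final answer".
THINK_PATTERN = re.compile(r"<think>(.*?)</think>(.*)", re.DOTALL)


def split_reasoning(response: str) -> tuple[str, str]:
    """Return (reasoning, answer); the reasoning is empty if no <think> block is found."""
    match = THINK_PATTERN.search(response)
    if match is None:
        return "", response.strip()
    return match.group(1).strip(), match.group(2).strip()


response = "<think>\nI had 12 apples and ate 3, so 12 - 3 = 9.\n</think>\nYou have 9 apples left."
reasoning, answer = split_reasoning(response)
print("Reasoning:", reasoning)  # Reasoning: I had 12 apples and ate 3, so 12 - 3 = 9.
print("Answer:", answer)        # Answer: You have 9 apples left.
```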

Reinforcement learning (with chain-of-thought)

DeepSeek used reinforcement learning to make its trained base LLM (DeepSeek v3) better at reasoning!

The model learns on its own (like a baby learning to walk)!

Over time, it learns which of the policies (behaviors) it tries maximize the rewards it is given (e.g., for accurate answers).

Here, the team used chain-of-thought reasoning to make the model self-reflect and evaluate its own answers, changing its behavior to get closer to the maximum reward.
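The DeepSeek-R1 paper describes simple rule-based rewards (an accuracy reward for a correct final answer and a format reward for putting the reasoning between `<think>` tags) and an RL algorithm called GRPO, which scores each sampled answer relative to the other answers sampled for the same question. The sketch below illustrates only those two ideas; the reward values, the exact-match answer check and the sample responses are assumptions, and the actual policy update (making above-average answers more likely) is not shown.

```python
import re
import statistics

RESPONSE_PATTERN = re.compile(r"\s*<think>(.+?)</think>\s*(.+?)\s*", re.DOTALL)


def rule_based_reward(response: str, expected_answer: str) -> float:
    """Hypothetical reward: +1 for the <think> format, +1 more if the final answer is correct."""
    match = RESPONSE_PATTERN.fullmatch(response)
    if match is None:
        return 0.0  # wrong format: no reward at all in this sketch
    reward = 1.0  # format reward
    if match.group(2) == expected_answer:
        reward += 1.0  # accuracy reward
    return reward


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """GRPO-style advantage: how much better each answer is than its group's average."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0] * len(rewards)  # all answers equally good: nothing to learn from this group
    return [(r - mean) / std for r in rewards]


# Four answers sampled from the model for the question "12 - 3 = ?"
samples = [
    "<think>12 - 3 = 9</think> 9",
    "<think>12 - 3 = 8</think> 8",
    "9",
    "<think>Take 3 away from 12, which leaves 9.</think> 9",
]
rewards = [rule_based_reward(s, "9") for s in samples]
advantages = group_relative_advantages(rewards)
print(rewards)  # [2.0, 1.0, 0.0, 2.0]
print([round(a, 2) for a in advantages])
```

Answers with a positive advantage are the ones the training step would reinforce; the answer without visible reasoning gets the most negative advantage.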

Distillation

Distillation in AI is like teaching a student from a teacher’s notes instead of making them read the entire textbook. A big, complex AI model (the "teacher") is used to train a smaller, simpler model (the "student") by transferring its knowledge in a more efficient way. This makes the smaller model faster and easier to use while still being nearly as smart as the big one!

Here DeepSeek R1 was used to teach open source models (Qwen-1.5B, Qwen-7B, Llama-8B, Qwen-14B, Qwen-32B and Llama-70B).

The smaller model becomes nearly as capable as the big one on many tasks, but costs much less to run!
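Two common ways to turn a teacher's knowledge into a training signal for a student are sketched below with made-up numbers. The first is classic soft-label distillation: the student matches the teacher's softened probability distribution, measured with a KL divergence. The second is sequence-level distillation, closer to what the DeepSeek-R1 paper reports for its distilled models: the teacher generates text (reasoning samples) and the student is simply fine-tuned to predict it, i.e. it minimizes the negative log-likelihood of the teacher's tokens. Both functions are illustrative sketches, not DeepSeek's training code.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_label_loss(teacher_logits, student_logits, temperature=2.0):
    """Classic distillation: KL(teacher || student) on temperature-softened distributions.
    (Implementations often also multiply this by temperature**2.)"""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def sequence_level_loss(student_logits_per_step, teacher_tokens):
    """Fine-tuning on teacher-generated text: mean negative log-likelihood of the teacher's tokens."""
    nll = 0.0
    for logits, token in zip(student_logits_per_step, teacher_tokens):
        nll -= math.log(softmax(logits)[token])
    return nll / len(teacher_tokens)


teacher = [4.0, 1.0, 0.5]  # made-up next-token scores over a 3-token vocabulary
print(soft_label_loss(teacher, teacher))          # 0.0: identical distributions
print(soft_label_loss(teacher, [1.0, 3.0, 0.5]))  # > 0: the student disagrees
teacher_text = [0, 1]  # token IDs "written" by the teacher
print(sequence_level_loss([[5.0, 0.0, 0.0], [0.0, 5.0, 0.0]], teacher_text))  # low loss
print(sequence_level_loss([[0.0, 5.0, 0.0], [5.0, 0.0, 0.0]], teacher_text))  # high loss
```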

Mixture of Experts

When the user sends a request to the model (inference), instead of using the entire model for every token like a traditional “dense” model, it only activates a few relevant, specialized “experts” for each token (Mixture of Experts). For example, DeepSeek v3 has 671 billion parameters in total, but only about 37 billion are activated for each token.

⇒ It saves a lot of computation, energy and cost!

ℹ️ By “experts” here, we don’t mean one expert specialized in maths and another in biology ⇒ an expert is a sub-network that a “router” learns to select for certain kinds of tokens.
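A minimal sketch of top-k expert routing, in the spirit of the Hugging Face MoE explainer listed in the sources. Everything is hypothetical and much simplified: each "expert" is a tiny random linear layer, a router scores all experts for each token, only the `top_k` best-scoring experts are run, and their outputs are mixed using the renormalized router weights. DeepSeek's actual MoE layers add refinements (such as always-active shared experts) that are not shown here.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoELayer:
    """Hypothetical MoE layer: n_experts small linear experts, of which only top_k run per token."""

    def __init__(self, dim: int, n_experts: int, top_k: int, seed: int = 0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one scoring vector per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
        # Experts: one dim x dim weight matrix each.
        self.experts = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)] for _ in range(n_experts)
        ]
        self.expert_calls = [0] * n_experts  # how often each expert actually ran

    def forward(self, token_vec: list[float]) -> tuple[list[float], list[int]]:
        scores = [dot(row, token_vec) for row in self.router]
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        gates = softmax([scores[i] for i in chosen])  # mixing weights over the chosen experts only
        output = [0.0] * len(token_vec)
        for gate, i in zip(gates, chosen):
            self.expert_calls[i] += 1
            expert_out = [dot(row, token_vec) for row in self.experts[i]]
            output = [o + gate * e for o, e in zip(output, expert_out)]
        return output, chosen


rng = random.Random(1)
layer = ToyMoELayer(dim=4, n_experts=8, top_k=2)
for _ in range(10):
    token = [rng.gauss(0, 1) for _ in range(4)]
    _, chosen = layer.forward(token)
    print("experts used:", chosen)
print("total expert runs:", sum(layer.expert_calls), "instead of", 10 * 8)  # total expert runs: 20 instead of 80
```

With 2 of 8 experts per token, only a quarter of the expert computation runs for each token, while all 8 experts' parameters remain available across different tokens.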

How were DeepSeek models developed?

To start with, the DeepSeek lab had already been working on LLMs for a while. So, all the new models are based on their latest previous model: DeepSeek v3. Here is a simplified version of their approach:

Creation of DeepSeek R1 Zero by applying reinforcement learning with chain of thought to DeepSeek v3.

Creation of a dataset of reasoning and non-reasoning samples by combining two datasets:

Reasoning dataset: generated by an upgraded version of DeepSeek v3 (fine-tuned on examples from DeepSeek R1 Zero, then trained with reinforcement learning and chain of thought), with DeepSeek v3 supervising (judging) the generation.

Non-reasoning dataset: reused (“old”) data from DeepSeek v3’s development, plus data generated by DeepSeek v3 with chain-of-thought prompting.

Creation of DeepSeek R1 by applying supervised fine-tuning on the previously created dataset, then reinforcement learning, to DeepSeek v3.

Creation of the series of distilled R1 models from smaller open source models (Llama 3.1/3.3 and Qwen 2.5 series):

DeepSeek R1 is the teacher model.

The Llama and Qwen 2.5 models are the student models.

Here is a more detailed (but still simplified) visualization of the training pipeline:

Is DeepSeek a big thing?

Yes, DeepSeek is definitely a big thing in the AI landscape! Here's why:

Competitive Performance: It achieves results comparable to leading models like GPT-4o and o1, but with fewer resources.

Technical Innovation: Their approach combines optimized GPU usage (PTX instead of CUDA), chain-of-thought reasoning, and Mixture of Experts to create more efficient models.

Cost Efficiency: DeepSeek distilled models cost much less to run than GPT models with equivalent performance.

Open Source Victory: By releasing fully open-source models, DeepSeek contributes to democratizing advanced AI capabilities.

Despite hardware constraints from US export restrictions, DeepSeek shows that efficient engineering and innovative approaches can sometimes be as valuable as raw computational power. This is a win not just for China but for the entire open-source AI community!

Sources

DeepLearning AI: https://learn.deeplearning.ai/courses/how-transformer-llms-work/lesson/nfshb/introduction

Cybernetica: https://www.cybernetica.fr/deepseek-r1-un-recap-tech-et-business/

AI with Alex: video link

DeepLearning with Yacine: video link

Hugging Face: https://huggingface.co/blog/moe#what-is-a-mixture-of-experts-moe